
Stripe’s Secret Weapon: How Apache Spark Fuels Bulletproof Payment Systems 🚀

Ever wondered how companies like Stripe, which process an enormous volume of financial transactions every second, keep their systems rock-solid? Clever code is only part of the answer. The rest comes from testing strategies that go well beyond the usual. In a recent presentation, Vivek Yadav, an Engineering Manager at Stripe, described an unconventional but highly effective use of Apache Spark. He wasn't talking about its usual analytics workloads. He was talking about something more critical: large-scale regression testing and “what-if” scenario analysis for Stripe’s core payment systems. Let’s dive into how Stripe uses Spark this way and why it pays off.

The Ever-Present Challenge: Migrating with Confidence ⚡

In the fast-paced world of tech, systems aren’t static. Business logic evolves, scale requirements grow, and sometimes a complete rewrite is the only path forward. For a payment company like Stripe, these migrations are high-stakes operations. The overriding goal is to make the new system behave identically to the old one, producing the same output for the same input, with no disruption for users. Crucially, the new system must also handle more load than the one it replaces.

The real kicker? To guarantee this safety, especially with financial transactions, the team needs to test new code against years of historical data. Imagine the sheer volume and complexity! Traditional testing methods simply can’t keep pace with data at that scale.

The Spark of Genius: Repurposing Apache Spark for Testing 💡

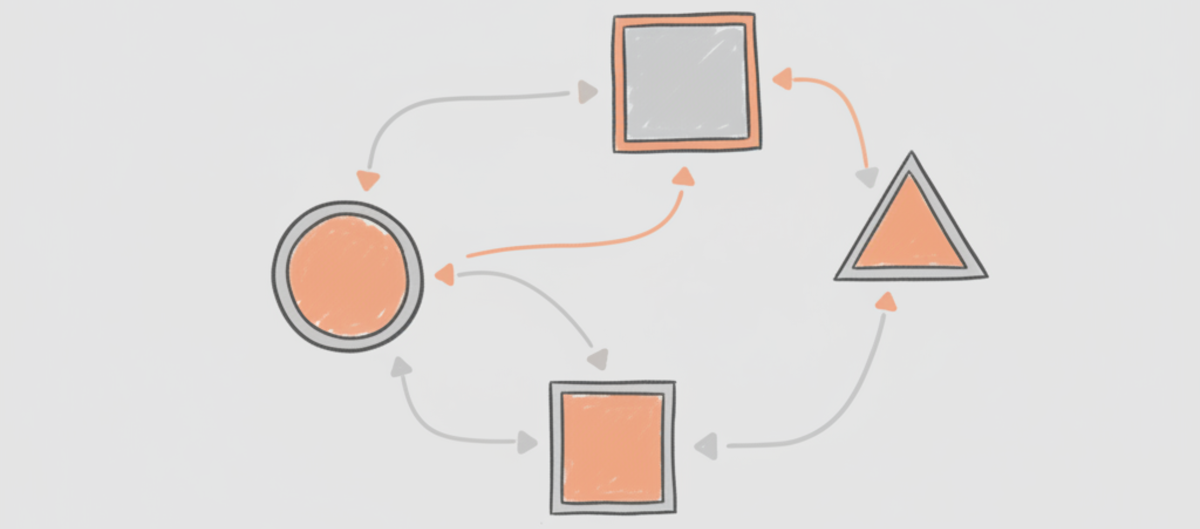

This is where Stripe’s ingenuity shines. The team avoided the laborious process of pushing historical data through a live service. Instead, they modeled each service as business logic wrapped in input/output operations. That view maps directly onto how Apache Spark works: read data in bulk, apply transformations, and write the results back out.
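The sketch below makes the “logic plus I/O wrappers” idea concrete. It is written in Python for brevity, although Stripe’s services are JVM-based. The fee rule, the `FeeRequest`/`FeeResponse` types, and the `handle_request`/`run_batch` wrappers are hypothetical illustrations, not Stripe’s code. The point is that one pure function, `compute_fee`, is shared by a request/response wrapper and a batch wrapper. In a real Spark job, the batch wrapper would become a distributed map over a dataset read from S3.

```python
import json
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator

# Hypothetical record types; the talk does not describe Stripe's schemas.
@dataclass(frozen=True)
class FeeRequest:
    request_id: str
    amount_cents: int
    network: str

@dataclass(frozen=True)
class FeeResponse:
    request_id: str
    fee_cents: int

# Core library: pure business logic with no I/O, so any wrapper can call it.
def compute_fee(req: FeeRequest, rates_bps: Dict[str, int]) -> FeeResponse:
    # Hypothetical rule: a per-network fee in basis points, rounded down.
    fee = req.amount_cents * rates_bps[req.network] // 10_000
    return FeeResponse(req.request_id, fee)

# Wrapper 1: service-style I/O, one JSON request in, one JSON response out.
def handle_request(body: str, rates_bps: Dict[str, int]) -> str:
    resp = compute_fee(FeeRequest(**json.loads(body)), rates_bps)
    return json.dumps({"request_id": resp.request_id, "fee_cents": resp.fee_cents})

# Wrapper 2: batch-style I/O over many records. In a Spark job this loop
# would be a distributed map over a dataset read from S3 or HDFS.
def run_batch(reqs: Iterable[FeeRequest], rates_bps: Dict[str, int]) -> Iterator[FeeResponse]:
    for req in reqs:
        yield compute_fee(req, rates_bps)
```

Both wrappers call the same `compute_fee`. A change to the rule therefore behaves identically whether it is serving live traffic or replaying history.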

Key Pillars of the Spark Testing Framework 🛠️

The magic lies in a few fundamental principles and their clever implementation:

- Code as a Reusable Library 📚: The bedrock of this approach is structuring service logic as a modular, reusable library. This library exposes methods that can be called by various “I/O wrappers.”

- Flexible I/O Layers 🌐: The same core logic library can then be adapted. One wrapper can make it function as a real-time service, handling individual requests and responses. Another wrapper can transform it into a Spark job, capable of processing massive datasets in bulk from distributed storage like S3 or HDFS.

- Harnessing Spark’s Parallel Power 🚀: By tapping into Spark’s distributed computing capabilities, Stripe can process years of historical data in a matter of hours. Traditional database-centric testing would struggle to match that throughput.

- S3: The Data Backbone 💾: The strategy heavily relies on data being accessible in S3. This is a natural fit for Stripe, as they already maintain cold storage copies of their data for analytical purposes.

- The Regression Replay 🔄: The core regression testing involves replaying historical production requests. The new code processes these requests and generates new responses, which are then diffed against the original production responses. Custom diff jobs pinpoint every discrepancy, and engineers iterate on the code until the diffs come back clean.
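The replay-and-diff loop can be sketched as a minimal in-memory Python illustration. This is not Stripe’s implementation. The log layout `(request_id, amount_cents, recorded_fee_cents)` and the `replay`/`diff_responses` helpers are hypothetical. In a Spark job, the diff would typically be a full outer join of original and replayed responses on the request ID, keeping only the rows where the two sides differ.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical production log: (request_id, amount_cents, recorded_fee_cents).
ProdLog = List[Tuple[str, int, int]]
Mismatch = Tuple[str, Optional[int], Optional[int]]

def replay(log: ProdLog, new_logic: Callable[[int], int]) -> Dict[str, int]:
    """Run the new code over every historical request."""
    return {rid: new_logic(amount) for rid, amount, _ in log}

def diff_responses(log: ProdLog, replayed: Dict[str, int]) -> List[Mismatch]:
    """Return (request_id, original, replayed) for every discrepancy.

    None marks a request missing on one side, mirroring a full outer join.
    """
    original = {rid: fee for rid, _, fee in log}
    mismatches: List[Mismatch] = []
    for rid in sorted(original.keys() | replayed.keys()):
        old, new = original.get(rid), replayed.get(rid)
        if old != new:
            mismatches.append((rid, old, new))
    return mismatches
```

Consider replaying the log `[("r1", 10000, 30), ("r2", 999, 2)]` with a rewrite that rounds fees up instead of down (`lambda a: -(-a * 30 // 10_000)`). Only `("r2", 2, 3)` is flagged. That is exactly the kind of subtle regression a diff job is meant to surface.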

Beyond Regression: The Power of “What-If” Scenarios 📊

But Stripe’s innovation doesn’t stop at just ensuring things work as they always have. Spark’s processing power unlocks incredible possibilities for predictive analysis:

- Simulating Future Impacts 📈: Imagine wanting to understand the financial implications of a change in network costs from Visa or Mastercard. By simply modifying configurations within the Spark job, Stripe can run “what-if” scenarios. This allows them to project the impact of these external rule changes on user billing and their own internal costs, providing invaluable foresight to finance and product teams.
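A what-if run reuses the same logic with a different configuration and compares the aggregates. The sketch below is a hypothetical Python illustration. The per-network rates in basis points, the `(network, amount_cents)` transaction tuples, and the `cost_by_network`/`what_if` helpers are assumptions for demonstration. They do not reflect actual Visa or Mastercard pricing or Stripe’s job configuration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical historical transactions: (network, amount_cents).
Txn = Tuple[str, int]

def cost_by_network(txns: List[Txn], rates_bps: Dict[str, int]) -> Dict[str, int]:
    """Total network cost per network under one rate configuration."""
    totals: Dict[str, int] = defaultdict(int)
    for network, amount in txns:
        totals[network] += amount * rates_bps[network] // 10_000
    return dict(totals)

def what_if(txns: List[Txn], baseline: Dict[str, int], scenario: Dict[str, int]) -> Dict[str, int]:
    """Per-network change in cost if `scenario` rates replaced `baseline` rates."""
    base = cost_by_network(txns, baseline)
    projected = cost_by_network(txns, scenario)
    return {network: projected[network] - base[network] for network in base}
```

For example, take the transactions `[("visa", 10000), ("visa", 20000), ("mastercard", 10000)]` and raise a hypothetical Visa rate from 30 to 35 bps. The result is `{"visa": 15, "mastercard": 0}`: the same historical data, re-priced under a proposed rule. The transactions are passed as a list because both configurations iterate over them.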

The Transformative Impact: Confidence, Speed, and Savings ✨

This unconventional application of Spark has yielded remarkable benefits:

- Skyrocketing Developer Confidence 👨‍💻: With millions of historical transactions already replayed against the new code, developers and reviewers can approve changes with far more confidence. Their focus shifts from hunting for bugs to refining the code itself.

- Drastic Reduction in Production Issues 🎯: This layered testing approach significantly minimizes the number of regressions that make it to production, a substantial leap forward from relying solely on unit and integration tests.

- Lightning-Fast Feedback Loops ⚡: For minor code adjustments, Spark jobs can complete in minutes, giving developers a rapid feedback loop. These runs can even be wired into pull request workflows.

- Unbeatable Cost-Effectiveness 💰: Compared to the astronomical costs of scaling databases to handle massive historical data testing, the Spark-based approach is significantly more economical, thanks to leveraging existing infrastructure and Spark’s inherent efficiency.

- Bridging Engineering and Business 🤝: The “what-if” testing capabilities empower business teams with data-driven projections, fostering a deeper understanding of the financial ramifications of external factors.

Navigating the Nuances: Scope and Limitations 🧭

While incredibly powerful, this approach isn’t a one-size-fits-all solution. It’s best suited for:

- JVM-Centric Services ☕: Services written in JVM languages like Scala or Java are ideal candidates, because the same service library can be called directly from a Spark job running on the JVM.

- Data Readily Available in S3 ☁️: The efficiency is maximized when data is easily accessible in S3.

- Stateless or Self-Contained Logic 🧩: Spark-based testing is most effective for services whose logic doesn’t require extensive lookups from other external services. Think of calculations based on predefined rules, like network costs or billing amounts.

The speaker wisely emphasizes that this approach requires careful consideration of the specific service’s architecture and data storage.

The Road Ahead: Evolving for Complexity 🚀

Even with its impressive success, there’s always room for growth. Future enhancements are being explored to better handle stateful scenarios, potentially integrating state management more directly into the Spark processing pipeline for even more complex request dependencies.

Key Takeaways: The Stripe Spark Blueprint 💡

Stripe’s journey with Apache Spark for regression testing offers profound lessons for the tech community:

- Repurpose and Innovate: Powerful analytical tools like Apache Spark can be creatively adapted for critical engineering tasks.

- Code Design Matters: Structuring code as reusable libraries is fundamental to enabling flexible and robust testing strategies.

- Data Infrastructure is Key: Leveraging existing data infrastructure, like S3, significantly reduces implementation costs and complexity.

- Beyond Basic Testing: The framework extends far beyond simple regression, offering powerful “what-if” analysis that bridges engineering and business needs.

- Cost-Benefit is King: The decision to use Spark was driven by its superior cost-effectiveness and efficiency compared to scaling databases for massive data processing.

- Scale is Everything: Stripe operates at an immense scale, handling hundreds of billions of rows and terabytes of data, underscoring the need for such robust solutions.

Stripe’s innovative use of Apache Spark is a testament to their engineering prowess and their unwavering commitment to building secure, reliable, and future-proof payment systems. It’s a compelling reminder that sometimes, the most groundbreaking solutions come from looking at existing tools with a fresh, imaginative perspective.