🚀 Level Up Your Deployments: Introducing AI-Powered Canary Deployments 🤖

Deploying new features can be nerve-wracking. That moment of truth when you push code live – will it break everything? What if it introduces a critical bug impacting thousands of users? Thankfully, there’s a safer way. This post dives into a fascinating approach: AI-powered canary deployments. Buckle up, because we’re about to explore how AI can revolutionize your deployment pipeline!

The Canary Deployment Problem & Its Evolution 🐛➡️🕊️

Traditionally, deployments follow a “big bang” approach – releasing changes to all users at once. This is risky. A safer alternative is the canary deployment. Imagine releasing a new version of your app to a small group of users – the “canary.” You monitor their experience, looking for issues. If everything looks good, you gradually roll out the new version to more users.
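Here is a minimal Python sketch of that progression. It assumes a fixed list of traffic percentages and a health check at each step. `run_canary` and its `is_healthy` callback are hypothetical names for illustration, not Argo Rollouts' API. A real controller would also pause between steps and actually reconfigure traffic rather than just record the weights.

```python
from typing import Callable, List


def run_canary(steps: List[int], is_healthy: Callable[[int], bool]) -> List[int]:
    """Shift traffic to the canary one step at a time (hypothetical sketch).

    steps:      canary traffic percentages, e.g. [20, 40, 60, 80, 100]
    is_healthy: hypothetical check of the canary at a given weight
    Returns the weights applied, in order. A trailing 0 means the
    rollout was aborted and all traffic went back to the stable version.
    """
    if any(not 0 < w <= 100 for w in steps) or steps != sorted(steps):
        raise ValueError("steps must be ascending percentages in (0, 100]")
    applied: List[int] = []
    for weight in steps:
        applied.append(weight)      # send `weight`% of traffic to the canary
        if not is_healthy(weight):  # problem spotted: roll back
            applied.append(0)
            return applied
    return applied                  # reached the last step without a failure


# A canary that misbehaves once it receives more than 40% of traffic:
print(run_canary([20, 40, 60, 80, 100], lambda weight: weight <= 40))
# → [20, 40, 60, 0]
```

The canary passes the first two steps and fails at 60%, so traffic returns to the stable version. The approach in this talk replaces a hard-coded health rule like this one with an LLM's judgment.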

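But managing canary deployments manually can be tedious and time-consuming. Someone has to watch the metrics at every step and decide whether to promote the new version or roll it back. This is where things get really exciting: combining canary deployments with the power of Artificial Intelligence (AI).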

Enter AI: Automating the Canary Process 💡

The core concept is simple: use AI, specifically Large Language Models (LLMs), to automate the entire canary deployment process. This isn’t just about monitoring metrics; it’s about having an intelligent agent that can:

- Analyze deployment behavior in real-time.

- Identify and resolve issues automatically.

- Create GitHub issues to track problems.

- Even generate pull requests for fixes!

The Tech Stack: Building the Intelligent Pipeline 🛠️

So, how do we actually build this AI-powered canary deployment system? Here’s a look at the key components:

- Argo Rollouts: This is your foundation. Argo Rollouts is a Kubernetes controller that manages canary deployments. It lets you define rollout steps (e.g., send 20% of traffic to the canary, then 40%, and so on) and the conditions for moving from one step to the next. Labels are crucial here because they are how stable and canary pods are told apart, so traffic is routed to the right version.

- Custom Argo Rollouts Plugin: This is where the AI magic happens. The plugin integrates LLMs and agents into the Argo Rollouts process. It works by:

  - Analyzing the canary’s performance using an LLM, guided by a specific prompt that dictates both the analysis and the response format.

  - Automatically generating GitHub issues when problems are detected.

  - Supporting an “agent mode” powered by A2A (Agent to Agent), allowing for more complex, chained automation tasks.

- GitHub Integration: Seamlessly creates issues and pull requests, streamlining the debugging and fixing process.

- A2A (Agent to Agent): Enables chaining multiple AI agents together to tackle more complex tasks.
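The talk did not show the plugin's prompt or response format. The following is therefore only a Python sketch of the pattern described above, and it rests on several assumptions:

- The prompt asks for a JSON reply with hypothetical `promote` and `reason` fields.
- The LLM call itself is left out.
- A failed verdict is turned into a request body for GitHub's REST endpoint for creating issues (`POST /repos/{owner}/{repo}/issues`, which accepts `title`, `body`, and `labels`).

The `canary-failure` label and all function names are illustrative.

```python
import json
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical prompt; doubled braces survive str.format as literal { and }.
PROMPT_TEMPLATE = (
    "You are reviewing a canary deployment.\n"
    "Stable metrics: {stable}\n"
    "Canary metrics: {canary}\n"
    'Reply with JSON only: {{"promote": true|false, "reason": "<one sentence>"}}'
)


@dataclass
class Verdict:
    promote: bool
    reason: str


def build_prompt(stable: Dict[str, float], canary: Dict[str, float]) -> str:
    """Fill the template with both versions' metrics, serialized as JSON."""
    return PROMPT_TEMPLATE.format(
        stable=json.dumps(stable, sort_keys=True),
        canary=json.dumps(canary, sort_keys=True),
    )


def parse_verdict(raw: str) -> Verdict:
    """Parse the model's reply; anything off-schema counts as a failure."""
    try:
        data = json.loads(raw)
        promote, reason = data["promote"], data["reason"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return Verdict(False, "unparseable LLM response")
    if not isinstance(promote, bool) or not isinstance(reason, str):
        return Verdict(False, "LLM response did not match the expected schema")
    return Verdict(promote, reason)


def issue_payload(verdict: Verdict, rollout: str) -> Optional[dict]:
    """JSON body for POST /repos/{owner}/{repo}/issues, or None if healthy."""
    if verdict.promote:
        return None
    return {
        "title": f"Canary analysis failed for {rollout}",
        "body": verdict.reason,
        "labels": ["canary-failure"],  # hypothetical label name
    }


prompt = build_prompt({"error_rate": 0.01}, {"error_rate": 0.09})
# `prompt` would go to an LLM; this reply is made up for illustration.
verdict = parse_verdict('{"promote": false, "reason": "Canary error rate is 9x stable."}')
print(issue_payload(verdict, "checkout-service"))
```

This sketch deliberately treats any malformed or off-schema reply as a failure. An LLM that answers in the wrong format should block promotion rather than let an unverified canary through.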

The Benefits: Why Should You Care? ✨

This approach isn’t just about fancy technology; it delivers real-world benefits:

- Reduced Risk: Safer deployments through gradual rollout and automated issue detection. Fewer late-night fire drills!

- Increased Efficiency: Automates tedious tasks, freeing up engineers to focus on more strategic work.

- Continuous Improvement: Automated issue resolution allows for faster iteration and a more robust product.

- The “Robot Overlords” (Just Kidding!): Machines handle the repetitive tasks so humans can focus on higher-level concerns, a genuinely exciting prospect.

The Agent Loop: The Secret Sauce 🌐

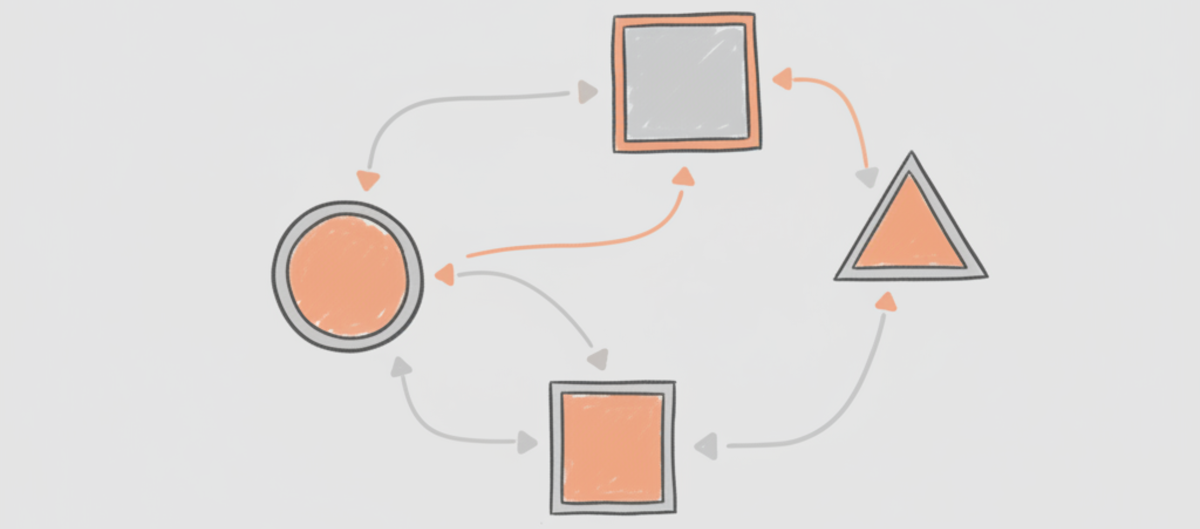

The real power lies in what’s called The Agent Loop. It’s a closed-loop system where AI agents continuously monitor, analyze, fix, and deploy. This isn’t a one-and-done process; it’s a cycle of continuous learning and improvement. The AI learns from its mistakes, and the deployment pipeline becomes increasingly self-healing.
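To illustrate the shape of that loop, here is a toy Python simulation in which each stage is a plain function that receives and returns a shared state object. Every agent is a hypothetical stand-in:

- The monitor just lists known problems.
- The analyzer picks one of them.
- The fixer produces a fix description instead of a real pull request.
- The deployer removes the fixed problem.

The talk's agent mode reportedly hands work between agents over A2A, which this sketch does not implement.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoopState:
    """Context handed from one (hypothetical) agent to the next."""
    open_bugs: List[str]                          # problems in the running version
    findings: List[str] = field(default_factory=list)
    fixes: List[str] = field(default_factory=list)
    deploys: int = 0


Agent = Callable[[LoopState], LoopState]


def monitor(state: LoopState) -> LoopState:
    # Stand-in for watching metrics: report every problem still present.
    state.findings = list(state.open_bugs)
    return state


def analyze(state: LoopState) -> LoopState:
    # Stand-in for the LLM step: focus on the first reported problem.
    state.findings = state.findings[:1]
    return state


def fix(state: LoopState) -> LoopState:
    # Stand-in for opening an issue and a pull request.
    state.fixes = [f"fix: {bug}" for bug in state.findings]
    return state


def deploy(state: LoopState) -> LoopState:
    # Ship the fix as a new canary; the fixed problem goes away.
    for bug in state.findings:
        state.open_bugs.remove(bug)
    state.deploys += 1
    return state


def run_agent_loop(
    state: LoopState, watcher: Agent, workers: List[Agent], max_iterations: int = 10
) -> LoopState:
    """Repeat monitor -> analyze -> fix -> deploy until nothing is found."""
    for _ in range(max_iterations):
        state = watcher(state)
        if not state.findings:
            break                                 # converged: nothing left to fix
        for agent in workers:
            state = agent(state)
    return state


final = run_agent_loop(
    LoopState(open_bugs=["slow query", "nil pointer"]), monitor, [analyze, fix, deploy]
)
print(final.deploys, final.open_bugs)  # → 2 []
```

`max_iterations` bounds the loop so that a fix which never resolves its problem cannot keep redeploying forever. Some such budget seems prudent in any closed-loop system.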

Code & Further Exploration 💾

The talk did not include explicit URLs, but resources and code repositories for Argo Rollouts and the plugin are available for those eager to delve deeper into the technical implementation.

Key Takeaways & Future Directions 📡

AI-powered canary deployments represent a significant leap forward in software deployment. While the technical details can be complex, the potential benefits are compelling: reduced risk, increased efficiency, and continuous improvement. Imagine a future where your deployment pipeline is not just automated but intelligent, constantly learning and adapting to ensure a smooth and reliable user experience. That future is closer than you think!

Note: The presentation was dense with technical jargon and lacked visuals. A live demo would have significantly enhanced the understanding of this exciting new approach.