
🚀 Level Up Your AI: Harmonising LLM Agents for Real-World Market Intelligence 🌐

Hey everyone! 👋 I’m Uluatin Oadun, a “data and AI explorer,” and I’m thrilled to share a technique I’m passionate about: Harmonising LLM agents for real-world market intelligence. We’re moving beyond isolated AI outputs to structured, aligned decisions – and it’s a game-changer.

For those who caught my presentation at API Ideas in London, this is a deeper dive. For everyone else, let’s explore how we can harness the power of AI while mitigating the chaos that can arise when multiple agents disagree.

The Problem: Why Isolated AI Agents Can Lead to Confusion 🤯

We’re all familiar with Large Language Models (LLMs) like GPT and Claude. When wrapped with tools, memory, and structured output, they become powerful AI agents. But here’s the rub: when these agents work in isolation, they can give conflicting answers.
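Agents can only be compared if their answers share a structure. Here is a minimal sketch of that idea. It assumes each agent is prompted to reply with a JSON object containing `recommendation`, `confidence` (0 to 1) and `rationale`. That schema, the label set and `parse_agent_output` are all hypothetical; the talk does not specify them.

```python
import json
from dataclasses import dataclass

# Hypothetical label set; a real deployment would define its own.
ALLOWED_RECOMMENDATIONS = {"buy", "hold", "sell"}


@dataclass(frozen=True)
class AgentOutput:
    agent: str
    recommendation: str
    confidence: float  # 0.0 (no confidence) to 1.0 (full confidence)
    rationale: str


def parse_agent_output(agent: str, raw: str) -> AgentOutput:
    """Parse one agent's JSON reply, rejecting unknown labels or out-of-range confidence."""
    data = json.loads(raw)
    rec = str(data["recommendation"]).lower()
    if rec not in ALLOWED_RECOMMENDATIONS:
        raise ValueError(f"{agent}: unknown recommendation {rec!r}")
    conf = float(data["confidence"])
    if not 0.0 <= conf <= 1.0:
        raise ValueError(f"{agent}: confidence {conf} outside [0, 1]")
    return AgentOutput(agent, rec, conf, str(data.get("rationale", "")))


reply = '{"recommendation": "Buy", "confidence": 0.8, "rationale": "Strong earnings"}'
print(parse_agent_output("financial", reply))
```

Rejecting malformed replies at this stage means that the later comparison steps only ever see well-typed, comparable records.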

Imagine you’re trying to decide whether to invest in a stock. You ask three agents: a financial analyst, a regulatory expert, and a sentiment tracker. The financial agent says “buy!”, the regulatory expert flags the investment as too risky, and the sentiment tracker reports mixed public opinion.

Which do you trust?

This isn’t a hypothetical problem. It’s a reality when relying on multiple AI voices without a process for alignment. That’s where Decision Alignment Protocols (DAPs) come in.

Introducing DAPs: Bringing Structure to AI Decision-Making ✨

DAPs are designed to move us from noise to structure in AI decision-making. Think of them as a framework for orchestrating your AI agents, ensuring they work together rather than at cross-purposes.

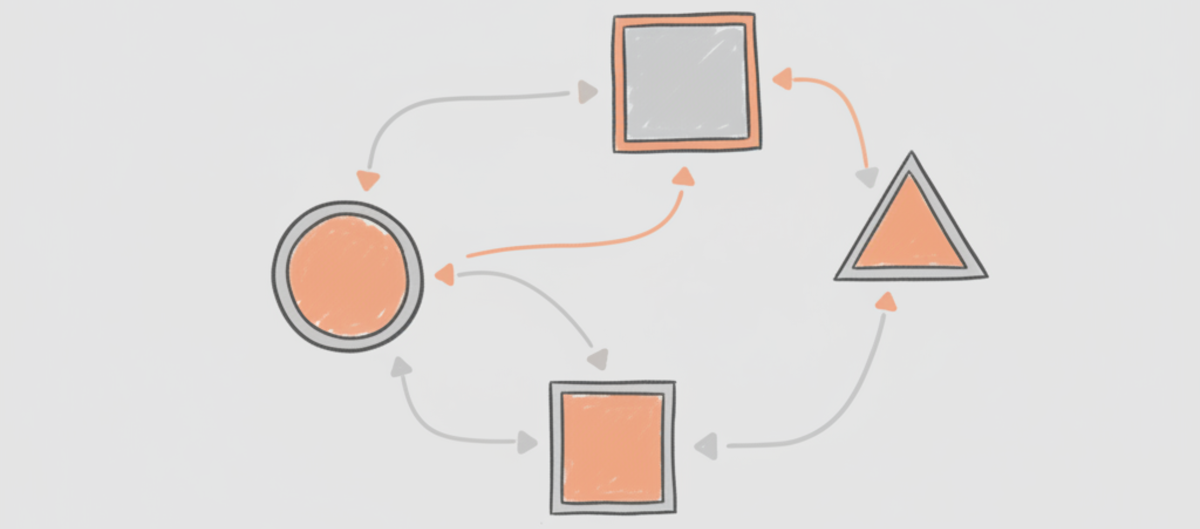

Here’s the core process:

- Independent Insights: Agents gather data and generate initial responses.

- Conflict Identification: An LLM (like Grok) compares responses and flags any conflicts.

- Structured Negotiation/Voting: Responses are ranked and scored.

- Aligned Output: The best option is chosen, or multiple perspectives are synthesized into a unified recommendation.
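The four steps can be sketched in a few lines of Python. This is a toy built on loud assumptions. The agents are stub functions rather than LLM calls. The LLM-based conflict check (Grok in the talk) is replaced by a simple rule that flags any disagreement between recommendations. `run_dap` and the agent names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Stand-in "agent": a function returning (recommendation, confidence).
# Real agents would be LLM calls with tools and memory.
Agent = Callable[[str], Tuple[str, float]]


def run_dap(question: str, agents: Dict[str, Agent]) -> dict:
    # 1. Independent insights: each agent answers without seeing the others.
    answers = {name: agent(question) for name, agent in agents.items()}

    # 2. Conflict identification: the talk uses an LLM here; this sketch
    #    simply flags a conflict whenever recommendations differ.
    conflict = len({rec for rec, _ in answers.values()}) > 1

    # 3. Structured voting: score each recommendation by summed confidence.
    scores: Dict[str, float] = defaultdict(float)
    for rec, conf in answers.values():
        scores[rec] += conf
    ranking: List[Tuple[str, float]] = sorted(
        scores.items(), key=lambda kv: kv[1], reverse=True
    )

    # 4. Aligned output: the top-scoring recommendation wins.
    return {"decision": ranking[0][0], "conflict": conflict,
            "ranking": ranking, "answers": answers}


agents = {
    "financial": lambda q: ("buy", 0.7),
    "regulatory": lambda q: ("avoid", 0.9),
    "sentiment": lambda q: ("hold", 0.4),
}
result = run_dap("Should we invest in ACME?", agents)  # "ACME" is a made-up ticker
print(result["decision"], result["conflict"])
```

With these made-up confidences, the regulatory agent's "avoid" ranks first. `conflict` is `True`, which is the signal a fuller implementation would hand on to negotiation instead of silently picking a winner.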

Essentially, DAPs bring a structured debate and peer-review process to AI decision-making.

How DAPs Work: A Multi-Perspective Framework 🛠️

DAPs utilize a multi-perspective framework, broken down into three key stages:

- Intracluster Consensus: Agents within the same domain (e.g., two financial analysts) align their views first.

- Intercluster Negotiation: Different domains (finance, regulation, sentiment) hash out their conflicts and discuss how to move forward.

- Final Harmonisation: Weights are applied based on track record or confidence levels, resulting in a single, clear decision.
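Here is a minimal sketch of the three stages. It assumes each agent reports a score in [-1, 1] (against to for) plus a confidence. The talk does not say how intercluster negotiation is carried out, so stage 2 here only surfaces the domains that point in opposite directions. The track-record weights, the 0.1 decision threshold and all function names are illustrative assumptions.

```python
from typing import Dict, List, Tuple

# Each agent reports (score, confidence): score in [-1, 1], confidence in (0, 1].
Signal = Tuple[float, float]


def intra_cluster(signals: List[Signal]) -> float:
    """Stage 1: confidence-weighted mean of the agents within one domain."""
    total_conf = sum(c for _, c in signals)
    return sum(s * c for s, c in signals) / total_conf


def inter_cluster_conflicts(cluster_scores: Dict[str, float]) -> List[Tuple[str, str]]:
    """Stage 2: pairs of domains whose consensus points in opposite directions."""
    names = sorted(cluster_scores)
    return [(a, b) for i, a in enumerate(names) for b in names[i + 1:]
            if cluster_scores[a] * cluster_scores[b] < 0]


def harmonise(cluster_scores: Dict[str, float], track_record: Dict[str, float],
              threshold: float = 0.1) -> str:
    """Stage 3: weight each domain by its track record and map to one decision."""
    total_w = sum(track_record[d] for d in cluster_scores)
    combined = sum(cluster_scores[d] * track_record[d] for d in cluster_scores) / total_w
    if combined > threshold:
        return "buy"
    if combined < -threshold:
        return "avoid"
    return "hold"


clusters = {
    "finance": [(0.8, 0.9), (0.6, 0.5)],
    "regulation": [(-0.7, 0.8)],
    "sentiment": [(0.1, 0.4), (-0.1, 0.4)],
}
scores = {name: intra_cluster(sigs) for name, sigs in clusters.items()}
print(scores)
print(inter_cluster_conflicts(scores))
print(harmonise(scores, {"finance": 0.6, "regulation": 0.8, "sentiment": 0.3}))
```

With these invented numbers, finance and regulation are flagged as conflicting. Once regulation's stronger track record is applied, the combined score lands near zero, so the sketch returns `hold`.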

A crucial component is confidence scoring. Each agent’s output is assigned a score reflecting its reliability. This ensures stronger signals carry more influence.
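Confidence scoring is what separates this from a simple head count. The sketch below contrasts a plain majority vote with a confidence-weighted one. Deriving confidence from a Laplace-smoothed hit rate is one hypothetical choice of reliability score, not the method from the talk.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def track_record_confidence(correct: int, total: int) -> float:
    """Hypothetical reliability score: Laplace-smoothed hit rate, so an agent
    with no history starts at 0.5 rather than 0 or 1."""
    return (correct + 1) / (total + 2)


def majority_vote(votes: List[Tuple[str, float]]) -> str:
    """Every agent counts once, regardless of reliability."""
    return Counter(label for label, _ in votes).most_common(1)[0][0]


def weighted_vote(votes: List[Tuple[str, float]]) -> str:
    """Each agent's vote counts in proportion to its confidence."""
    totals: Dict[str, float] = defaultdict(float)
    for label, confidence in votes:
        totals[label] += confidence
    return max(totals, key=totals.get)


votes = [
    ("buy", track_record_confidence(2, 10)),     # weak history: 3/12 = 0.25
    ("buy", track_record_confidence(3, 10)),     # weak history: 4/12 ≈ 0.33
    ("avoid", track_record_confidence(45, 50)),  # strong history: 46/52 ≈ 0.88
]
print(majority_vote(votes))  # → buy
print(weighted_vote(votes))  # → avoid
```

Two weak "buy" signals win a head count, but one reliable "avoid" outweighs them once confidence is taken into account.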

Real-World Impact: DAPs in Action 🎯

Let’s say you’re deciding whether to invest in a stock. DAPs facilitate a structured debate:

- Agent 1 says, “Competitor prices are dropping 5%.”

- Agent 2 says, “Premium products are trending up.”

- Agent 3 says, “Overall demand is forecast to rise 3%.”

The system flags the potential conflict between falling competitor prices and rising demand. Instead of discarding either opinion, the DAP factors in both perspectives and applies weighting rules (perhaps giving more weight to demand data) to produce a unified recommendation: “Overall demand will grow even with competitors cutting prices.”
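This example can be sketched in code. Two parts are assumptions standing in for the unspecified "weighting rules": each claim is encoded as a +1/-1 signal about demand growth, and the demand forecast gets double weight.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Signal:
    agent: str
    claim: str
    direction: int  # +1 supports "demand grows", -1 argues against it
    weight: float   # hypothetical weighting rule: demand data counts double


signals: List[Signal] = [
    Signal("Agent 1", "Competitor prices are dropping 5%", -1, 1.0),
    Signal("Agent 2", "Premium products are trending up", +1, 1.0),
    Signal("Agent 3", "Overall demand is forecast to rise 3%", +1, 2.0),
]

# Flag conflicts: every pair of signals pointing in opposite directions.
conflicts = [(a.agent, b.agent)
             for i, a in enumerate(signals)
             for b in signals[i + 1:]
             if a.direction != b.direction]

# Keep every perspective, but let the weights decide the net direction.
net = sum(s.direction * s.weight for s in signals)
support = net / sum(s.weight for s in signals)  # -1 (all against) to +1 (all for)

if support > 0:
    recommendation = "Overall demand will grow despite competitors' price cuts."
else:
    recommendation = "Competitor price cuts are likely to dampen demand."

print(conflicts)          # → [('Agent 1', 'Agent 2'), ('Agent 1', 'Agent 3')]
print(round(support, 2))  # → 0.5
print(recommendation)
```

No signal is discarded. Agent 1's price data still pulls the net support down from 1.0 to 0.5, but the weighted evidence still favours growth.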

The Numbers Don’t Lie: DAPs Deliver 📊

Testing has shown impressive results:

- 99.7% Alignment Achieved: A significant improvement in consistency.

- 91.2% Accuracy vs. 85.1% Baseline: DAPs demonstrably improve accuracy.

- Sustained Accuracy Advantage (6.1 percentage points): The gap held consistently over 24 months.

- Robustness: DAPs perform well, even in volatile markets and amidst regulatory shifts.

- Multi-Dimensional Risk Management: DAPs outperform traditional systems in risk assessment.
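The 6.1-point advantage quoted above is the absolute gap between 91.2% and 85.1%, so it is measured in percentage points. A quick calculation on the same two reported numbers shows what that gap means in relative terms:

```python
dap_accuracy = 91.2       # % accuracy reported for DAPs
baseline_accuracy = 85.1  # % accuracy reported for the baseline

gap_points = dap_accuracy - baseline_accuracy              # absolute gap
relative_gain = gap_points / baseline_accuracy * 100       # gain as % of baseline accuracy
error_cut = gap_points / (100 - baseline_accuracy) * 100   # share of baseline errors removed

print(f"{gap_points:.1f} percentage points")  # → 6.1 percentage points
print(f"{relative_gain:.1f}% relative gain")  # → 7.2% relative gain
print(f"{error_cut:.1f}% fewer errors")       # → 40.9% fewer errors
```

Put another way, the baseline gets 14.9% of cases wrong and DAPs get 8.8% wrong. That removes roughly four in ten of the baseline's errors.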

Limitations and Considerations ⚠️

While powerful, DAPs aren’t a magic bullet.

- Increased Complexity: Managing multiple agents adds complexity.

- Potential for Bias: Weighting rules must be carefully designed to avoid introducing bias.

- Quality of Underlying Models: DAPs amplify the quality (or lack thereof) of the AI models they’re using.

- Guardrails Needed: Even with DAPs, careful design and governance remain essential.
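The talk does not prescribe specific guardrails. The sketch below is one hypothetical illustration of the bias and governance points above. It bounds how far the weighting rules can tilt the outcome, and it hands close calls to a human instead of forcing a decision. The bounds `w_min`/`w_max`, the `margin` threshold and both function names are arbitrary assumptions.

```python
from typing import Dict


def bounded_weights(raw: Dict[str, float], w_min: float = 0.5,
                    w_max: float = 2.0) -> Dict[str, float]:
    """Clamp each raw weight into [w_min, w_max], then normalise to sum to 1.
    No agent can count more than w_max / w_min times any other."""
    clamped = {name: min(max(w, w_min), w_max) for name, w in raw.items()}
    total = sum(clamped.values())
    return {name: w / total for name, w in clamped.items()}


def decide_or_escalate(scores: Dict[str, float], raw_weights: Dict[str, float],
                       margin: float = 0.2) -> str:
    """Combine agent scores in [-1, 1]; escalate when the result is too close to call."""
    weights = bounded_weights(raw_weights)
    combined = sum(scores[name] * weights[name] for name in scores)
    if abs(combined) < margin:
        return "escalate to human review"
    return "proceed: for" if combined > 0 else "proceed: against"


scores = {"finance": 0.9, "regulation": -0.8, "sentiment": 0.1}
skewed = {"finance": 10.0, "regulation": 1.0, "sentiment": 0.1}
print(bounded_weights(skewed))
print(decide_or_escalate(scores, skewed))
print(decide_or_escalate(scores, {"finance": 1.0, "regulation": 1.0, "sentiment": 1.0}))
```

With equal weights, the combined score is about 0.07, so the call is escalated. With the skewed weights, the cap limits finance to four times sentiment's influence, and the result (0.3) clears the margin.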

The Future is Aligned 🌐

DAPs represent a crucial step towards harnessing the full potential of AI for real-world decision-making. By moving from isolated reasoning to collaborative, aligned outputs, we can build trust, structure, and quality into our AI-driven processes.